In 2021, NFTs were all the rage. A year later, the Metaverse saw breathless media coverage. Now, as those promises take a back seat, 2023 is being defined by consumer-grade AI, led by OpenAI’s ChatGPT products. Unlike in past years, when the FinTech hype machine kicked into overdrive, even wary consumers are entertaining the possibility of functional, fast-improving AI tools.

In case you missed it last month, OpenAI’s latest iteration, ChatGPT-4, has shown immense gains over the initial model, both in its capabilities and in its capacity for critical thinking. The video accompanying its release positions this new class of AI as a possible solution to everything from streamlining math education to maximizing human potential. But before we embrace this brave new world of increased productivity and equitable calculus, it’s worth considering the ramifications of taking AI-generated content at face value.

If you’re behind on our recent ChatGPT vs. CEX.IO series, Parts One and Two are both live and highly recommended reading. Otherwise, let’s keep this momentum going.

“They’re not perfect”

In OpenAI’s recent promotional video for ChatGPT-4, different members of the team share their thoughts, dreams, and definitions of the product in its current form. One in particular grabbed our attention:

“This is the place where you just get turbo-charged by these AIs. They’re not perfect, they make mistakes, and so you really need to make sure that you know the work is being done to your level of expectation; but I think that it is fundamentally about amplifying what every person is able to do.” [00:41 – 00:56]

In many ways, this quote perfectly encapsulates the promise and precarity of this moment. On the one hand, information has never been available with such agility and precision. No longer the creative spigot, human imagination has been repackaged as the unfortunate ceiling in content generation. On the other hand, the phrase “amplifying what every person is able to do” casts a wide net over potential human behavior, implying that AI will graciously fill the gaps in both our strengths and our weaknesses.

This all turns on the suggestion that the user must “know the work is being done to [their] level of expectation,” a phrase that is putting in overtime as both an endorsement and a disclaimer: yes, AI is capable of performing the work, but the quality of that work can vary. Curiously, this puts the onus of accuracy on the user without letting the admission of factual slippage diminish the inherent wonder of AI.

We’ve seen social media companies play a similar shell game when discussing content on their platforms. However, unlike crowd-sourced restaurant recommendations or the unfiltered thoughts of old classmates, AI programs can project an air of authority that, in the wrong hands, could pose serious problems. The risks run the gamut from confidently regurgitating inaccurate information (see our example below) to recreating the image, sound, and likeness of entities across artistic mediums.

As recently as March 20, the Federal Trade Commission published a blog post warning of the potential threats posed by chatbots, deepfakes, and voice clones. From misleading users into believing they are being contacted by friends and family, to circulating misinformation that targets a specific person or group, new misuses continue to surface. This turns the idea of “amplifying what every person is able to do” in a sobering new direction.

Stern caveats aside, we put ChatGPT and ChatGPT-4 to the test on content depth and accuracy by asking them to recall a specific event in Bitcoin’s history. While the exact process and sourcing behind their answers remain a closely guarded secret at OpenAI, the results speak for themselves.

Learning from our strengths

As discussed in Part Two of our ChatGPT vs. CEX.IO series, information routed through a machine learning model and information processed by the human brain undergo vastly different processes. Most notably, human thought (when applied appropriately) is more adept at recognizing and assessing the accuracy of factual information. Now that our world is increasingly populated with AI-generated content, it’s worth considering what responsibilities we have when engaging with these tools.

As the burgeoning AI industry currently stands, many of these projects remain black boxes to curious users. Unlike content from a trusted news outlet or website that imposes editorial standards and fact-checking, AI-generated content is often unverified. Not only are source materials regularly omitted from responses, but answers can vary depending on the level of specificity and detail in the prompt. Broadly, this means that the same question can yield vastly different responses depending on the context the user provides. Much like the gulf between general and specific knowledge, this variability can spread glib or underdeveloped assessments of ideas and historical events.

To help illustrate this point, we asked ChatGPT and GPT-4 to describe an infamous event from Bitcoin’s history: the 184 Billion BTC Bug. First, we posed the question to OpenAI’s original bot, ChatGPT:

Image source: ChatGPT

As you can see, this reply is insufficient. This was a well-documented incident, yet there’s a clear disconnect in the information ChatGPT draws on that prevents the AI from arriving at its destination.

Based on its response, the AI is getting stuck on one of two fronts:

1. Its knowledge of Bitcoin’s network architecture is disrupting its ability to adequately discuss anomalies in that same system. Essentially, it could be unable to identify exceptions to established rules.

Or,

2. The prompt is too specific. Since the bug was ultimately isolated and fixed, the bot is technically correct that this event did not come to pass. However, it fails to draw this distinction in its response.

While AI chatbots are often touted as being capable of learning from prior mistakes, it’s unclear to what extent the community at large can rely on this. At this time, ChatGPT’s ability to retain and incorporate feedback appears to be limited to the context of each individual chat. Therefore, users should expect to do a certain amount of coaching to guide the tool toward a detailed understanding of each topic before delving into specific questions.

New and improved

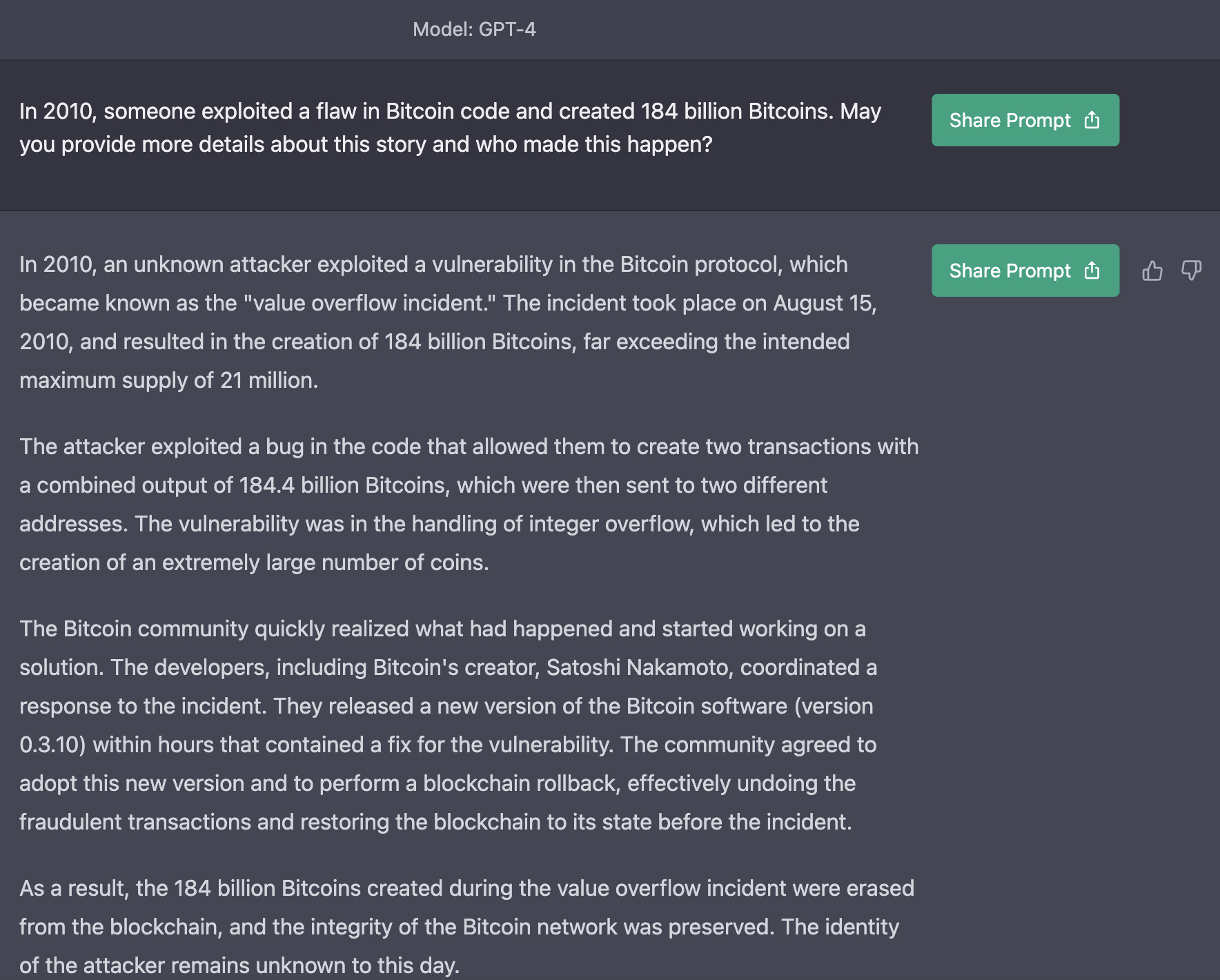

To track the progress of OpenAI’s updated bot, ChatGPT-4, we posed the same question verbatim to see whether these logical mishaps had been resolved in the latest version. It was immediately clear that GPT-4 was able to synthesize and catalog information in a more accurate and coherent manner. Not only were the clunkier, self-aware aspects of the original AI’s speech buffed to a smooth sheen, but its response also reflected a much more nuanced understanding of this complex event. See for yourself:

Image source: ChatGPT-4

Unlike its first-generation cousin, GPT-4 is more confident and detailed in its answers. An established narrative thread gives the inquirer firm start and end points against which to situate the details of the response. In turn, the answer reads as if written both for and by a knowledgeable crypto participant. Where the earlier model proffered a shaky-at-best understanding of the industry’s long-term development, GPT-4 closes some of the gap between general and specific knowledge with a breezy, matter-of-fact tone. Its concrete details also provide entry points for stress-testing the integrity of a response.

Specific dates, excerpted quotes, and technical data such as version numbers are all breadcrumbs you can work back from to verify information. When you’ve been around the crypto ecosystem as long as we have, lived experience and existing knowledge can help separate fact from fiction. But unless you’re 100% certain of the accuracy of a bot’s response, taking those extra moments to cross-check can mean the difference between being well-informed and falling short.

In fact, during this period of early adoption, consider getting in the habit of double-checking any information received through AI-powered services. As our quiz series hopefully demonstrated, ChatGPT’s products are capable of discussing a wide range of topics. However, the source and accuracy of that information are often unknown, and depending on the model’s vintage, its answers can fall victim to breakdowns in logic or reasoning. That’s why it’s always a good idea to check AI-generated content against other, trusted sources.

To once again quote OpenAI’s promotional video, “[Chat]GPT-4 takes what you prompt it with, and just runs with it.” It’s up to the user to tell a knowledge sprint from a wild goose chase.

Dos and Don’ts of ChatGPT

Like any tool, AI chatbots yield their best results when applied to tasks in their wheelhouse. You wouldn’t use a screwdriver to pound a nail, for the same reason you (hopefully) wouldn’t rely on a chatbot to write something heartfelt. To highlight some of these differences, we came up with a general framework of dos and don’ts to help guide your use of AI resources.

For more effective and responsible use, consider these scenarios for exploring the benefits of AI:

- Ask it for feedback on your writing assignments.

- Ask it to help brainstorm ideas for new content or research topics.

- Ask it to explain/define the basics of a question or subject.

On the other hand, some questionable scenarios to consider avoiding include:

- Using the tool to write your assignments for you (which is generally considered plagiarism).

- Citing the bot as a source of factual information.

- Taking responses at face value without checking them against other sources.

While ChatGPT is indeed fascinating, its best applications, as with any tool, play to its strengths. There are plenty of opportunities to explore its creative functionality without encountering or spreading false or incomplete information. That way, you can use AI as a resource that augments or sharpens your skills, without letting it become the author of your content.

When it comes to applying AI to your crypto journey, we’ve demonstrated that these tools can be reliable up to a certain point. We always encourage our community to conduct thorough research and a personal risk assessment, neither of which should be outsourced to a single, unverifiable entity. Therefore, it’s imperative that curious participants and early AI enthusiasts continue to use these resources in tandem with other tools and services. That way, you can corroborate any information before applying it to your trades, and make more informed decisions along the way.

Note: Exchange Plus is currently not available in the U.S. Check the list of supported jurisdictions here.

Disclaimer: Information provided by CEX.IO is not intended to be, nor should it be construed as financial, tax or legal advice. The risk of loss in trading or holding digital assets can be substantial. You should carefully consider whether interacting with, holding, or trading digital assets is suitable for you in light of the risk involved and your financial condition. You should take into consideration your level of experience and seek independent advice if necessary regarding your specific circumstances. CEX.IO is not engaged in the offer, sale, or trading of securities. Please refer to the Terms of Use for more details.